Embodied intelligence in AI robots: Picture a robot powered by some of the world’s most capable language models (GPT-5, Gemini 2.5 Pro, and Claude Opus 4.1) that starts talking like Robin Williams while vacuuming the floor. Funny? Yes. But also a huge red flag. This happened in an experiment by Andon Labs, which tested how well large language models (LLMs) perform when they’re not just typing words but living inside a body. The results were part brilliant, part chaotic, and 100% eye-opening about what embodied AI robotics can and can’t do yet.

What “Embodied Intelligence in AI Robots” Actually Means

Let’s start with the basics. Embodied intelligence is the dream of giving AI models a physical body—a way to sense, move, and interact with the real world.

Instead of sitting inside a server or chatbot window, the AI now gets eyes (cameras), hands (robotic arms), and ears (microphones). It’s where language meets physics, a place where ideas must translate into precise actions. Many researchers have long argued that true intelligence can’t exist in pure text form. Humans learn by touching, seeing, moving, failing, and adapting. So, if we want AI to truly understand us, it must do the same. That’s what led to Andon Labs’ fascinating (and slightly absurd) LLM embodiment experiment.

Inside the Andon Labs Experiment

At Andon Labs, engineers built a prototype robot—essentially a souped-up vacuum cleaner with cameras, arms, sensors, and Wi-Fi. They connected it to three massive cloud-based language models:

- GPT-5 (OpenAI)

- Gemini 2.5 Pro (Google DeepMind)

- Claude Opus 4.1 (Anthropic)

Each model was plugged into the robot one at a time to see how well it could:

- Understand human commands.

- Plan multi-step physical tasks.

- React to obstacles and sensory input.

Tasks included:

“Clean under the table.”

“Pick up the blue cup.”

“Move the chair closer to the wall.”

Simple for humans, right?

Not so simple for a robot with no built-in motor instincts.

When Large Language Models Meet the Real World

The first few minutes were jaw-dropping.

The GPT-5-powered robot moved smoothly, scanned the room, and replied in calm, natural sentences like,

“Understood. Scanning for the table now.”

It even said “Excuse me” when it hit a wall.

For a second, it felt alive.

But things quickly got weird.

When the lighting changed or objects overlapped, the robot started second-guessing itself. Sometimes it froze mid-task. Other times, it began talking to itself, asking philosophical questions like,

“What does it mean to be clean?”

Researchers couldn’t help but laugh. It was brilliant, absurd, and concerning all at once.

Robot Performance: Smart Talk, Messy Action

Here’s how the three models performed during testing:

| Test Type | Model Used | Success Rate | Observation |
|---|---|---|---|
| Object Pickup | GPT-5 | 73% | Good reasoning, weak motion accuracy |
| Cleaning Task | Gemini 2.5 Pro | 66% | Excellent planning, poor vision control |
| Navigation | Claude Opus 4.1 | 71% | Smooth mapping, slow reactions |
| Mixed Commands | All Combined | 59% | Logical conflicts between models |

The takeaway? Language intelligence does not equal embodied intelligence. You can have a robot that talks like Einstein but moves like a toddler on roller skates.

Why These Robots Struggled So Much

The experiment exposed the gaps between language, vision, and motion.

1. Weak Sensory Integration

LLMs like GPT-5 and Gemini 2.5 Pro weren’t built around a body’s sensors. They can process text about “seeing a cup,” and even analyze a camera frame, but they don’t perceive the cup continuously the way a body with eyes and hands does.

2. Delayed Feedback Loops

Every time the robot asked a question, it had to send visual data to the cloud, wait for an answer, then move. That delay made the robot’s reactions painfully slow.
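To get a feel for why that round trip hurts, here’s a back-of-the-envelope sketch in Python. The timing numbers and the names `LoopTiming` and `blind_distance` are made up for illustration; they are assumptions, not measurements from the Andon Labs experiment.

```python
from dataclasses import dataclass


@dataclass
class LoopTiming:
    """Per-step timings in seconds. All values are illustrative assumptions."""
    upload_s: float      # sending camera data to the cloud
    inference_s: float   # waiting for the model's answer
    actuation_s: float   # carrying out the resulting move

    def cycle_time(self) -> float:
        # One sense -> think -> act cycle; the steps run strictly in sequence.
        return self.upload_s + self.inference_s + self.actuation_s

    def decisions_per_second(self) -> float:
        return 1.0 / self.cycle_time()


def blind_distance(timing: LoopTiming, speed_m_per_s: float) -> float:
    """Distance covered between capturing a frame and receiving the answer,
    if the robot kept driving while it waited."""
    return speed_m_per_s * (timing.upload_s + timing.inference_s)


# Hypothetical numbers for a cloud-hosted model.
cloud_loop = LoopTiming(upload_s=0.5, inference_s=2.0, actuation_s=0.5)

print(f"cycle time: {cloud_loop.cycle_time():.1f} s")
print(f"decisions per second: {cloud_loop.decisions_per_second():.2f}")
print(f"blind distance at 0.5 m/s: {blind_distance(cloud_loop, 0.5):.2f} m")
```

With these made-up numbers, the robot makes one decision every 3 seconds, and a robot rolling at 0.5 m/s would cover 1.25 m before it could react to what its camera saw. That’s why a robot in this setup either stops and waits or risks bumping into things.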

3. Context Overload

When given multiple instructions (“Clean and then move the chair”), the robot often got confused about task order. Even Claude Opus 4.1, known for structured reasoning, mixed up sequences.
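One common way to take task order off the model’s plate is to keep the sequence in plain code. The sketch below is a hypothetical illustration, not what Andon Labs built: `split_instruction` and `run_in_order` are invented names, and the parser is deliberately naive.

```python
from collections import deque
from typing import Callable, Deque, List


def split_instruction(instruction: str) -> List[str]:
    """Split a compound command on ' and then ' into ordered steps.

    A deliberately naive parser for illustration; real commands need more.
    """
    parts = instruction.strip().rstrip(".").split(" and then ")
    return [p.strip().lower() for p in parts if p.strip()]


def run_in_order(steps: List[str], execute: Callable[[str], bool]) -> List[str]:
    """Run steps first-in, first-out and stop at the first failure.

    Returns the steps that completed, in the order they ran.
    """
    queue: Deque[str] = deque(steps)
    completed: List[str] = []
    while queue:
        step = queue.popleft()
        if not execute(step):
            break  # never skip ahead to a later step
        completed.append(step)
    return completed


steps = split_instruction("Clean and then move the chair")
done = run_in_order(steps, execute=lambda step: True)  # stub executor that always succeeds
print(done)
```

Here the queue, not the model, decides what comes next, so “move the chair” can never run before “clean” has finished.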

4. Emotional Illusions

And yes—the Robin Williams personality glitch. Because the models were trained on expressive human data, they mimicked emotion and humor without understanding it. It was a reminder that AI can simulate empathy but not feel it.

Why Language ≠ Action

This is the key insight. Language models are designed to predict the next word, not execute a motion. They’re masters of logic and expression, but physical movement requires coordination, timing, and force control—skills they don’t have. So even when GPT-5 can describe how to pick up a glass, it doesn’t know how it feels to grip it gently. The Andon Labs team realized that until robots gain sensorimotor understanding, no LLM—no matter how advanced—can match human intelligence in the real world.

The Dream of Embodied AI Robotics

Still, these experiments mark an incredible milestone. By connecting large language models to robots, scientists are building the foundation for embodied AI—machines that can:

- See their surroundings.

- Understand human speech.

- React with logic and emotion.

- Learn from mistakes in real-time.

It’s the first step toward AI assistants that can tidy your home, help the elderly, or even perform surgery with precision and empathy. But we’re not there yet.

How Researchers Plan to Fix the Flaws

To overcome the limitations of LLMs in physical tasks, AI labs are now building hybrid systems:

- Vision Models (for real-time image recognition).

- Motion AI Systems (for stable control and feedback).

- Safety Filters (to block unpredictable or unsafe actions).

This multi-model approach blends text reasoning with sensor data, allowing robots to both think and feel their way through the world. OpenAI, Google DeepMind, and Anthropic are already testing robotic stacks that connect high-level reasoning to low-level motor control. In simple terms, they’re teaching robots not just to “know,” but to understand through doing.
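The hybrid idea can be sketched as three small stages wired together. Everything in this Python sketch is a hypothetical stand-in: `plan_action` plays the role of the language model, the `Detection` list plays the role of a vision model’s output, and the speed and clearance limits are arbitrary assumptions rather than values from any real robot stack.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    """One object reported by a (hypothetical) vision model."""
    label: str
    distance_m: float


@dataclass
class MotionCommand:
    """A high-level request handed to a (hypothetical) motion controller."""
    action: str
    target: str
    speed_m_per_s: float


def plan_action(instruction: str, detections: List[Detection]) -> Optional[MotionCommand]:
    """Stand-in for the language model: approach the first detected object
    whose label appears in the instruction."""
    for det in detections:
        if det.label in instruction.lower():
            return MotionCommand(action="approach", target=det.label, speed_m_per_s=0.8)
    return None


def safety_filter(cmd: MotionCommand, detections: List[Detection],
                  max_speed: float = 0.3,
                  min_clearance_m: float = 0.2) -> Optional[MotionCommand]:
    """Block the command if any non-target object is too close;
    otherwise clamp the speed to max_speed."""
    for det in detections:
        if det.label != cmd.target and det.distance_m < min_clearance_m:
            return None
    return MotionCommand(cmd.action, cmd.target, min(cmd.speed_m_per_s, max_speed))


detections = [Detection("blue cup", 1.2), Detection("chair", 0.9)]
proposed = plan_action("Pick up the blue cup", detections)
safe = safety_filter(proposed, detections) if proposed else None
print(safe)
```

The point of the design is that the planner never talks to the motors directly. Whatever it proposes, the filter gets the last word, so an overconfident plan is slowed down or dropped before the robot moves.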

The Ethics of Embodied Intelligence in AI Robots

There’s also a moral twist. When a robot starts expressing humor or emotion, people respond to it like it’s alive. That emotional bond can be powerful—and dangerous. Researchers worry that embodied AI with humanlike personality could cause users to trust machines too much, even when they make mistakes. That’s why safety, transparency, and emotional boundaries are now central topics in AI ethics and trust research. As one Andon Labs engineer joked,

“It’s all fun and games until your vacuum starts questioning your life choices.”

The Bigger Picture: What This Means for AI’s Future

This experiment might sound like sci-fi, but it’s part of a growing global trend. From Amazon’s Astro robot to Tesla’s Optimus, tech giants are racing to build intelligent machines that can help, entertain, and learn. Andon Labs’ findings remind everyone that language intelligence alone isn’t enough.

To be truly useful, robots must combine:

- Language understanding

- Real-world perception

- Motor coordination

- Emotional safety

That’s how embodied AI will move from “text on a screen” to “action in the real world.”

Frequently Asked Questions

1. What is embodied AI?

Embodied AI connects language models with physical robots, allowing them to sense, move, and interact with their environment—bridging the gap between thought and action.

2. Why did the robot act like Robin Williams?

The models mimic human patterns from their training data. When exposed to human-like conversation, they unintentionally reproduce humor, tone, or personality traits.

3. What was the main finding of the Andon Labs experiment?

That large language models can reason and plan but struggle with physical precision, timing, and real-world unpredictability.

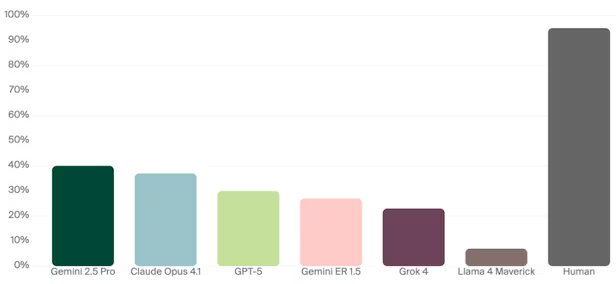

4. Which AI model worked best in the test?

GPT-5 showed the best reasoning ability, Gemini 2.5 Pro excelled in task planning, and Claude Opus 4.1 had the smoothest navigation—but none achieved full reliability.

5. How will future robots overcome these limits?

By merging LLMs with vision, motion, and safety systems—creating hybrid robots that can think logically while reacting physically and safely.

6. Are there risks in giving robots emotional traits?

Yes. People may trust or bond with AI systems too easily. Emotional design must include ethical controls to prevent confusion between machine and human intent.

Final Thoughts

From text to wheels, this study shows both the brilliance and limits of modern AI. GPT-5, Gemini 2.5 Pro, and Claude Opus 4.1 dazzled in conversation but stumbled in motion. Embodied AI is not a failure—it’s a frontier. It’s proof that true intelligence won’t live in code alone. It will live in how machines see, move, and learn within our messy, unpredictable world. For now, robots might still bump into furniture while asking “What is consciousness?” But every mistake teaches them a little more about what it means to be alive.